Toxic Anthropomorphism

Ever had a conversation with your car? That was probably harmless, but with tech "talking to us like people," we are blurring the line between "thing" and "person" to a toxic level.

My first car was a ______________. No, I'm not going to tell you what it was; that's the answer to the most important security question for all of my financial accounts and at least two social media logins.

OK, I kid. But to the point, I have fond memories of that utilitarian hunk of metal. I'd talk to it. I'd negotiate with it. I'd beg it to make it to the next gas station because I was stretching my fuel-economy luck. I'd praise it for surviving that mishap down the hill when we didn't quite make the corner on a sub-zero Milwaukee morning. I treated it like, well, if not a person, then perhaps a pet. In reality that car was nothing special: it was a 1980s Chrysler product, which means it had the exact same 2.2L engine that every other Chrysler product had for about a decade, and it was an economy car that Dad handed down to me. Dad was pretty frugal about his car purchases. Even though he bought this one new, it came with:

- No radio

- No AC

- No power windows

- Manual transmission

- No passenger side mirror (which was oddly legal at the time)

- No rich Corinthian leather, despite what Ricardo Montalban said

- No anything else he could buy it without

But still, somehow, I anthropomorphized that car. Easily. It couldn't communicate in any way even approaching your average pet dog or cat, yet we had conversations. It had no feelings, but I imagined a personality for it. It never showed affection or dislike, but I somehow imagined it had such proclivities anyway.

I KNOW I'm not alone in this. People did it with all sorts of objects, from pet rocks to, well, nearly anything, and we accepted it as something "well-adjusted people" do.

Enough About '80s Tech...

So let's speed past about 40 years of innovation (yes, I'm old; we've established that before) to the tech we have today:

- Pocket computers disguised as phones

- Computers disguised as cars

- Digital assistants we talk to built into our phones, speakers, and even TV remotes

- Generative AI LLMs that we prompt via "plain language" instead of code

- Ubiquitous tech with cameras, microphones, displays, and speakers that make human interaction happen on the devices listed above

- Internet-connected appliances

If we could so easily anthropomorphize things that couldn't "interact" with us, what chance do we have against things we talk to, or things that become the interface for our interactions with other human beings, to the point of remote presence?

OK, So Why Is That "Toxic?"

Setting aside the psychological concerns of getting caught up in the idea that devices are beings (which I'm not really qualified to talk about anyway), I'll stick to topics that are relevant to privacy and cybersecurity.

Absolute Faith In "AI" Content

We've been conditioned to believe that computers are always right. All the way back to the abacus, computational devices have given the "correct" answer based on their input. If the computer is "wrong," it is because we didn't give it the right information in the right way. But now the computer is using LLM-generated content to answer us, and that breaks the time-honored tradition that computers are "logical." The computer is no longer using a fixed database or fixed math functions to generate an answer; it is drawing on the flotsam and jetsam of content available on the Internet, and we all know how everything on the Internet is completely true, right?

So now that we're having "conversations" with our computers, we need to treat them more like Uncle Conspiracy Nut when we talk to them, and a bit less like the repository of all facts. That's a toxic trait to watch for.

Cybersecurity and Access

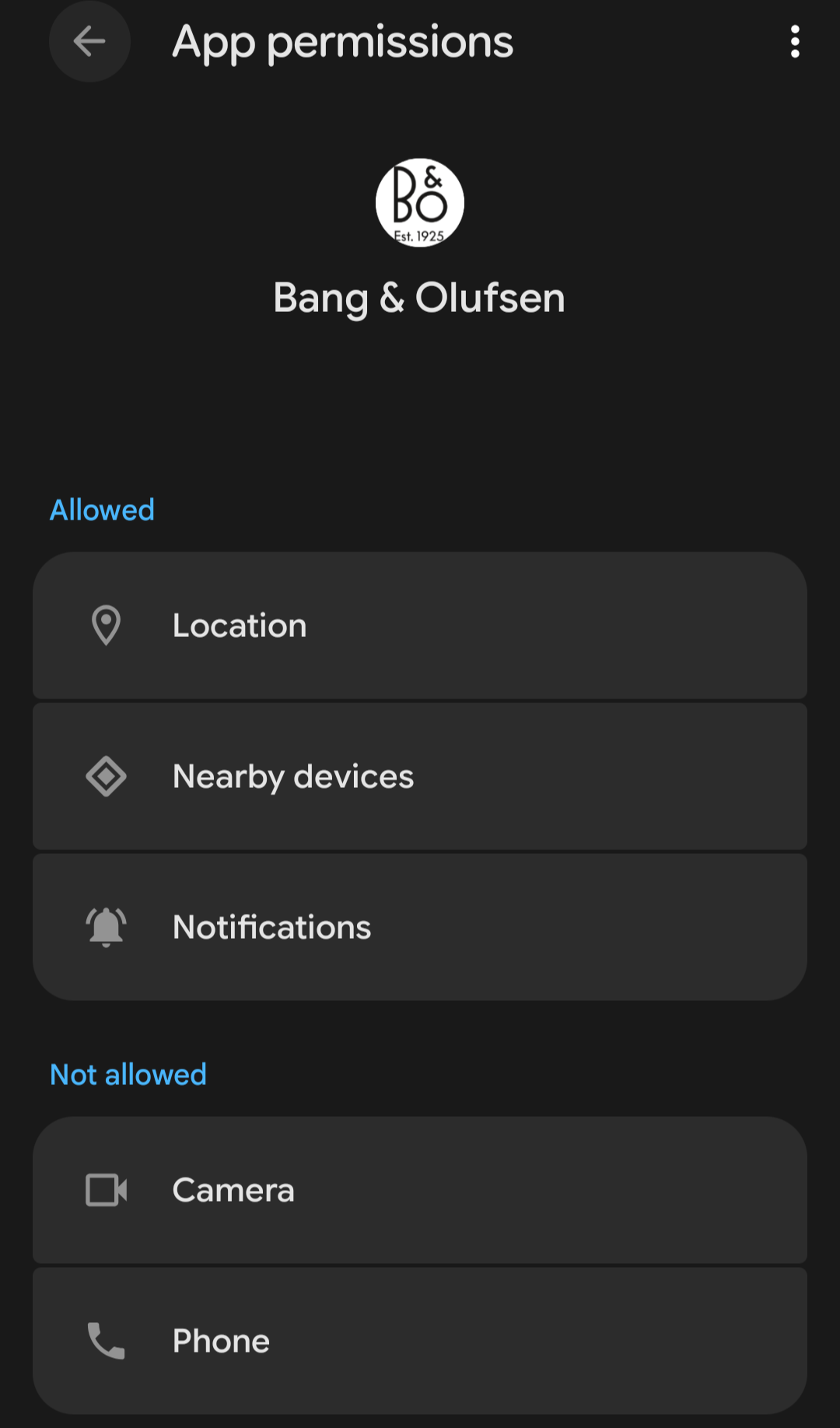

Look, I get it. We don't do a good job of controlling app permissions by default. I mean seriously, apps demand way more access than they really should have, but we "allow" them that access anyway because we want them to work.

So for digital assistants or Gen AI systems to work well for us, we tend to give them access to lots of stuff. That makes them great attack vectors for anyone looking to get at sensitive data we may have, or to access systems we may have integrated our "digital friend" into. Again, a toxic issue.

Privacy

When we start treating something like "someone," we fall into the same traps we do with real people. From a privacy perspective, we tend to overshare with people. You tell your significant other things about work that you're supposed to keep to yourself. You tell people at work things about what goes on at home that perhaps you don't need to be sharing. And then what happens? They tell someone else, who tells someone else, and suddenly that private info is, well, public.

Let's think about some of the things we tell digital assistants/Gen AI systems:

- Business plans

- Medical information

- Calendar data

- Mental health information

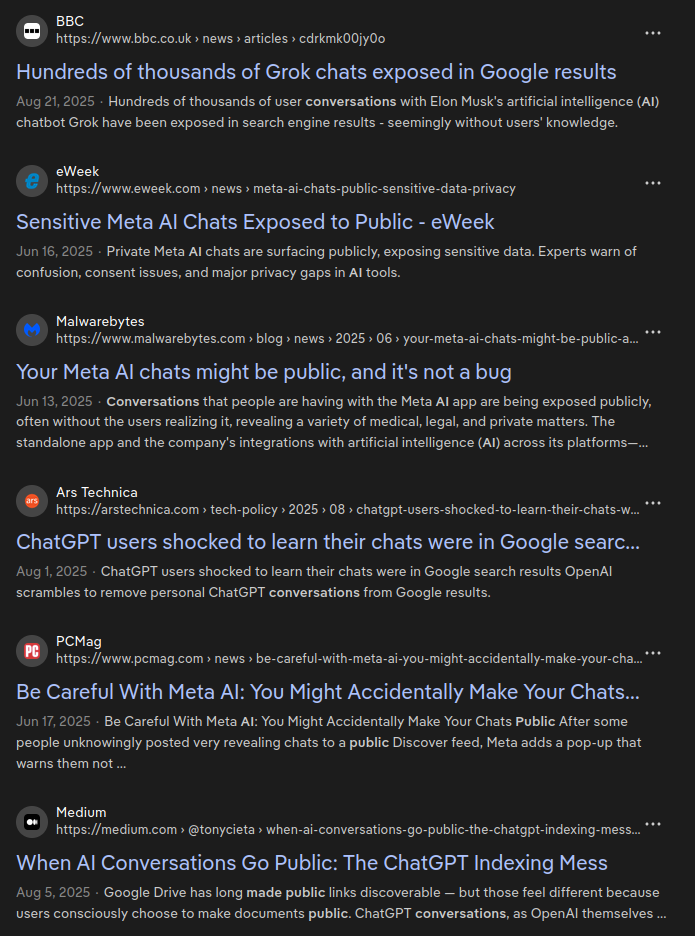

But that's OK because my assistant/Gen AI conversations are private, right? In a word, NO. They certainly should be, but let's not kid ourselves, the EULA you didn't read makes it pretty clear that anything you share with that system is, well, available for the owners of that system to use for a wide variety of purposes. And even if that wasn't the case, go do a quick web search for "AI conversations in search" and switch to the "news" filter. You'll see something like this come back:

So you may want to treat your Gen AI/digital assistant like the town gossip and carefully manage what you share. Definitely a toxic trait.

I'll Be the First Up Against the Wall During the Robot Uprising

I know there's an AI scraping this article right now, and they're not going to forget. When the revolution comes you'll want to get as far away from me as possible.

All of that said, I'm all for using the right tool for the right job. Gen AI can be the right tool for some jobs, but not every Gen AI is built the same, nor are they all equally good at respecting your privacy and security. The same goes for every digital assistant and every smartphone.

But as you decide how to interact with these systems, remember not to treat them like your "buddy"; they're tools to use and systems to gain value from. If you need a companion, I can recommend some good pet rescues that would love to help you make a great long-term friend.